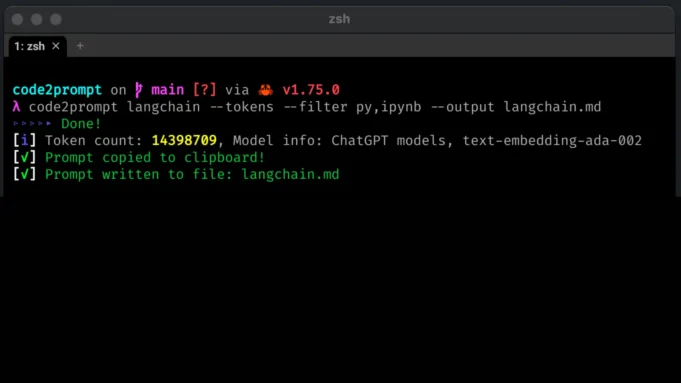

code2prompt is a command-line tool (CLI) that converts your codebase into a single LLM prompt with a source tree, prompt templating, and token counting.

Table of Contents

- Features

- Installation

- Usage

- Templates

- User Defined Variables

- Tokenizers

- Python SDK

- Contribution

- License

- Support The Author

Features

You can run this tool on an entire directory, and it will generate a well-formatted Markdown prompt that details the source tree structure and includes all of the code.

You can then upload this document to GPT or Claude models with larger context windows and ask them questions about your code.

- Quickly generate LLM prompts from codebases of any size.

- Customize prompt generation with Handlebars templates. (See the default template)

- Respects `.gitignore` (can be disabled with `--no-ignore`).

- Filter and exclude files using glob patterns.

- Control hidden file inclusion with the `--hidden` flag.

- Display the token count of the generated prompt. (See Tokenizers for more details)

- Optionally include Git diff output (staged files) in the generated prompt.

- Automatically copy the generated prompt to the clipboard.

- Save the generated prompt to an output file.

- Exclude files and folders by name or path.

- Add line numbers to source code blocks.
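The glob filtering and line-numbering features listed above can be approximated with Python's standard `fnmatch` module. This is a minimal sketch, not code2prompt's implementation. The helper names `is_selected` and `number_lines` are hypothetical, and the rule that an exclude pattern always beats an include pattern is an assumption made for illustration.

```python
from fnmatch import fnmatchcase


def is_selected(path: str, include: list[str], exclude: list[str]) -> bool:
    """Decide whether `path` goes into the prompt.

    Assumed rule (hypothetical): drop the path if it matches any exclude
    pattern; otherwise keep it if there are no include patterns or it
    matches at least one of them. Exclusion therefore wins over inclusion.
    Note that in fnmatch, "*" also matches "/" separators.
    """
    if any(fnmatchcase(path, pattern) for pattern in exclude):
        return False
    return not include or any(fnmatchcase(path, pattern) for pattern in include)


def number_lines(source: str) -> str:
    """Prefix each line of `source` with a right-aligned, 1-based line number."""
    lines = source.splitlines()
    width = len(str(len(lines)))
    return "\n".join(f"{i:>{width}} {line}" for i, line in enumerate(lines, start=1))


if __name__ == "__main__":
    files = ["src/main.rs", "src/lib.rs", "README.md", "target/debug/app"]
    kept = [f for f in files if is_selected(f, include=["*.rs", "*.md"], exclude=["target/*"])]
    print(kept)  # ['src/main.rs', 'src/lib.rs', 'README.md']
    print(number_lines('fn main() {\n    println!("hi");\n}'))
```

Letting exclusion take precedence keeps a broad include such as `*.rs` from pulling in generated files under an excluded build directory.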

You can customize the prompt template to fit your use case. Under the hood, the tool traverses a codebase and builds a single prompt that combines all of the source files.

In short, it automates copy-pasting multiple source files into your prompt, formats them, and tells you how many tokens your code consumes.
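The traversal described above can be sketched in a few lines of Python. This is an illustrative approximation, not code2prompt's actual implementation. The `build_prompt` function, the Markdown layout (a source tree followed by one fenced block per file), and the whitespace word count used as a stand-in for a real tokenizer are all assumptions. The sketch does not honor `.gitignore`, hidden-file rules, or binary detection.

```python
import os
from pathlib import Path

FENCE = "`" * 3  # built indirectly so this example can sit inside a Markdown code fence


def build_prompt(root: str) -> tuple[str, int]:
    """Walk `root` and return (markdown_prompt, approximate_token_count).

    Hypothetical sketch: hidden files and .gitignore rules are NOT handled,
    and every file is read as UTF-8 text (undecodable bytes are replaced).
    """
    root_path = Path(root).resolve()
    tree_lines: list[str] = [root_path.name + "/"]
    file_sections: list[str] = []

    for dirpath, dirnames, filenames in os.walk(root_path):
        dirnames.sort()  # sort in place so os.walk visits subdirectories in a stable order
        rel_dir = Path(dirpath).relative_to(root_path)
        depth = len(rel_dir.parts)  # 0 for the root directory itself
        if depth > 0:
            tree_lines.append("  " * depth + rel_dir.name + "/")
        for name in sorted(filenames):
            tree_lines.append("  " * (depth + 1) + name)
            file_path = Path(dirpath) / name
            text = file_path.read_text(encoding="utf-8", errors="replace")
            rel = file_path.relative_to(root_path).as_posix()
            file_sections.append(f"Path: {rel}\n\n{FENCE}\n{text}\n{FENCE}")

    prompt = "Source Tree:\n\n" + "\n".join(tree_lines) + "\n\n" + "\n\n".join(file_sections)
    approx_tokens = len(prompt.split())  # crude whitespace word count, NOT a real tokenizer
    return prompt, approx_tokens
```

Calling `build_prompt("path/to/project")` returns the assembled Markdown and a rough size estimate. A real tokenizer will usually report a different count than whitespace splitting, so treat the number here only as an order-of-magnitude hint.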

Installation

Binary Releases

Download the latest binary for your OS from Releases.

Source Build

Requires:

- Git, Rust and Cargo.

git clone https://github.com/mufeedvh/code2prompt.git

cd code2prompt/

cargo build --release

Cargo

Install from the crates.io registry:

cargo install code2prompt

For unpublished builds:

cargo install --git https://github.com/mufeedvh/code2prompt --force

For more information, click here.