Latest Posts

Free Email Lookup Tools and Reverse Email Search Resources

By 0xSnow on March 6, 2026

Mr.Holmes – A Comprehensive Guide To Installing And Using The OSINT Tool

By 0xSnow on March 6, 2026

Whatweb – A Scanning Tool to Find Security Vulnerabilities in Web App

By 0xSnow on March 6, 2026

Whapa – Comprehensive Guide To The WhatsApp Forensic Toolset

By 0xSnow on March 6, 2026

Trending Posts

Email to Profile: Social Media Search and Free Lookup Tools

By 0xSnow on November 3, 2025

Advanced Free Email Lookup and Reverse Search Techniques

By 0xSnow on November 3, 2025

How to Use Pentest Copilot in Kali Linux

By 0xSnow on November 1, 2025

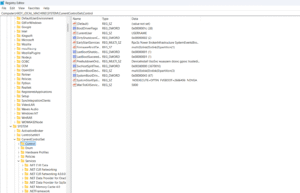

How to Use the Windows Registry to Optimize and Control Your PC

By Tamilselvan S on October 30, 2025

MQTT Security: Securing IoT Communications

By 0xSnow on October 30, 2025

Whapa – Comprehensive Guide To The WhatsApp Forensic Toolset

By 0xSnow on March 6, 2026

Free Email Lookup Tools and Reverse Email Search Resources

By 0xSnow on March 6, 2026

Whatweb – A Scanning Tool to Find Security Vulnerabilities in Web App

By 0xSnow on March 6, 2026

Mr.Holmes – A Comprehensive Guide To Installing And Using The OSINT Tool

By 0xSnow on March 6, 2026

Microsoft Unveils “Project Helix” – A Next-Gen Xbox Merging Console and PC Gaming

By 0xSnow on March 6, 2026

Latest News

Microsoft Unveils “Project Helix” – A Next-Gen Xbox Merging Console and PC Gaming

By 0xSnow on March 6, 2026

Free Email Lookup Tools and Reverse Email Search Resources

By 0xSnow on March 6, 2026

How AI Puts Data Security at Risk

By 0xSnow on December 13, 2025

The Evolution of Cloud Technology: Where We Started and Where We’re Headed

By 0xSnow on December 9, 2025

The Evolution of Online Finance Tools In a Tech-Driven World

By 0xSnow on December 9, 2025

A Complete Guide to Lenso.ai and Its Reverse Image Search Capabilities

By 0xSnow on December 8, 2025

How Web Application Firewalls (WAFs) Work

By 0xSnow on November 3, 2025

How to Send POST Requests Using curl in Linux

By 0xSnow on November 3, 2025

What Does chmod 777 Mean in Linux

By 0xSnow on November 3, 2025

How to Undo and Redo in Vim or Vi

By 0xSnow on November 3, 2025
