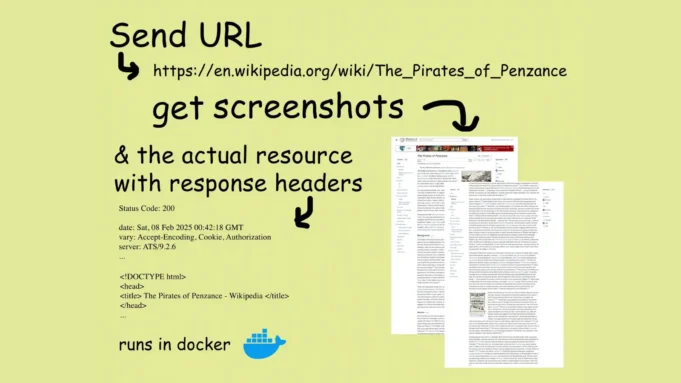

ScrapeServ is a robust and easy-to-use web scraping tool designed to capture website data and screenshots with minimal effort.

Created by Gordon Kamer to support Abbey, an AI platform, ScrapeServ operates as a local API server, enabling users to send a URL and receive website data along with screenshots of the site.

Key Features

- Dynamic Scrolling and Screenshots: ScrapeServ scrolls through web pages and captures screenshots of different sections, ensuring comprehensive visual documentation.

- Browser-Based Execution: It uses Playwright to run websites in a Firefox browser context, fully supporting JavaScript execution.

- HTTP Metadata: Provides HTTP status codes, headers, and metadata from the first request.

- Redirects and Downloads: Automatically handles redirects and processes download links effectively.

- Task Management: Implements a queue system with configurable memory allocation for efficient task processing.

- Blocking API: Each request returns only once its scrape has finished, so clients need no polling or job tracking.

- Containerized Deployment: Runs in an isolated Docker container for ease of setup and enhanced security.

To use ScrapeServ:

- Install Docker and Docker Compose.

- Clone the repository from GitHub.

- Run `docker compose up` to start the server at http://localhost:5006.

ScrapeServ offers flexibility for integration:

- API Interaction: Send JSON-formatted POST requests to the `/scrape` endpoint with parameters like `url`, `browser_dim`, `wait`, and `max_screenshots`.

- Command Line Access: Use tools like `curl` and `ripmime` to interact with the API from Mac/Linux terminals.
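As a rough illustration of the API interaction described above, the following Python sketch builds and sends a JSON POST request using only the standard library. The endpoint address and parameter names come from the text. The helper names (`build_scrape_request`, `scrape`), the timeout, and the example parameter values are assumptions made for illustration, not part of ScrapeServ itself.

```python
import json
import urllib.error
import urllib.request

# Address from the setup steps; adjust if the server runs elsewhere.
SCRAPE_URL = "http://localhost:5006/scrape"


def build_scrape_request(url, browser_dim=None, wait=None, max_screenshots=None):
    """Build (but do not send) a JSON POST request for the /scrape endpoint."""
    payload = {"url": url}
    # Include only the optional parameters the caller set, so the
    # server's own defaults apply to everything else.
    optional = {
        "browser_dim": browser_dim,
        "wait": wait,
        "max_screenshots": max_screenshots,
    }
    payload.update({k: v for k, v in optional.items() if v is not None})
    return urllib.request.Request(
        SCRAPE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def scrape(url, timeout=120, **options):
    """Send the request and return (content_type, raw_body).

    The timeout is a hypothetical client-side value; the call blocks
    until the server responds.
    """
    request = build_scrape_request(url, **options)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.headers.get("Content-Type", ""), response.read()
    except urllib.error.HTTPError as err:
        # Failed requests carry a JSON error message in the body.
        raise RuntimeError(err.read().decode("utf-8", "replace")) from err
```

With the server running, a call such as `scrape("https://example.com", max_screenshots=3)` would return the response's content type and raw body for further processing.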

The /scrape endpoint returns:

- A multipart response containing request metadata, website data (HTML), and up to 5 screenshots (JPEG, PNG, or WebP formats).

- Error messages in JSON format for failed requests.
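One way to unpack the multipart response described above is sketched below, using Python's standard `email` parser. It sorts parts by their content type: the first JSON part is treated as request metadata, image parts as screenshots, and the remaining part as the website data. That classification rule and the function name `split_scrape_response` are assumptions for illustration; for large binary payloads a dedicated multipart decoder may be a better fit.

```python
import json
from email import message_from_bytes


def split_scrape_response(content_type, body):
    """Split a multipart /scrape response into metadata, page data, and screenshots."""
    # The email parser expects headers followed by a blank line, so the
    # HTTP Content-Type (which carries the boundary) is prepended to the body.
    header = b"Content-Type: " + content_type.encode("ascii") + b"\r\n\r\n"
    message = message_from_bytes(header + body)
    if not message.is_multipart():
        raise ValueError("expected a multipart response")

    metadata, page, screenshots = None, None, []
    for part in message.get_payload():
        kind = part.get_content_type()
        data = part.get_payload(decode=True)  # raw bytes of this part
        if kind == "application/json" and metadata is None:
            # Assumption: the first JSON part is the request metadata.
            metadata = json.loads(data)
        elif kind.startswith("image/"):
            screenshots.append((kind, data))
        elif page is None:
            # Assumption: the first remaining part is the website data.
            page = data
    return metadata, page, screenshots
```

The function takes the `Content-Type` header value and raw body of a successful response and returns the parsed metadata, the page bytes, and a list of `(mime_type, image_bytes)` pairs that can be written to disk.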

ScrapeServ prioritizes safety by:

- Running each task in an isolated browser context within a Docker container.

- Enforcing strict memory limits, timeouts, and URL validation.

For enhanced security, users can implement API keys via .env files or deploy the service on isolated virtual machines.

ScrapeServ suits developers who want web scraping with minimal configuration. Because it renders pages in a real browser and returns HTTP metadata, page content, and screenshots together, it is a good fit for JavaScript-heavy websites.