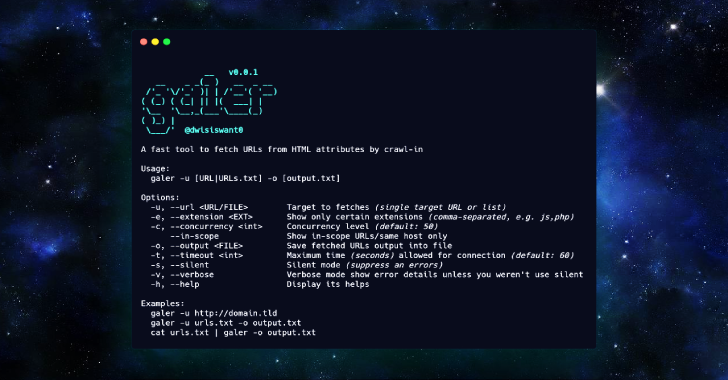

Galer is a fast tool that fetches URLs from HTML attributes by crawling pages. It was inspired by a tweet from @omespino showing that src, href, url, and action values can be extracted by evaluating JavaScript through the Chrome DevTools Protocol.
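To illustrate the idea of collecting URLs from those four attributes, here is a minimal, stdlib-only sketch. It is not galer's implementation: galer evaluates JavaScript in a real browser through the Chrome DevTools Protocol, while this sketch swaps in a simple regular expression over static HTML (so it misses anything added by scripts at runtime). The function name `extractURLs` is hypothetical; relative values are resolved against the page URL with `net/url`.

```go
package main

import (
	"fmt"
	"net/url"
	"regexp"
)

// attrPattern is a simplified, regex-based stand-in for galer's in-browser
// JavaScript evaluation. It only matches quoted values of the src, href,
// url and action attributes in static HTML.
var attrPattern = regexp.MustCompile(`(?i)\b(?:src|href|url|action)\s*=\s*["']([^"']+)["']`)

// extractURLs (hypothetical helper) returns the attribute values found in
// body, resolved against base so relative paths become absolute URLs.
func extractURLs(base, body string) ([]string, error) {
	baseURL, err := url.Parse(base)
	if err != nil {
		return nil, err
	}
	var out []string
	for _, m := range attrPattern.FindAllStringSubmatch(body, -1) {
		ref, err := url.Parse(m[1])
		if err != nil {
			continue // skip values that are not valid URL references
		}
		out = append(out, baseURL.ResolveReference(ref).String())
	}
	return out, nil
}

func main() {
	page := `<script src="/app.js"></script>
<a href="https://example.org/about">About</a>
<form action="login.php"></form>`

	urls, err := extractURLs("http://domain.tld/index.html", page)
	if err != nil {
		panic(err)
	}
	for _, u := range urls {
		fmt.Println(u)
	}
}
```

A browser-based approach like galer's sees the DOM after scripts run, which is why it can find URLs that a static pass like this one would never see.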

Installation

- From Binary

Installation is easy. You can download a prebuilt binary from the releases page, unpack it, and run it, or install it with:

(sudo) curl -sSfL https://git.io/galer | sh -s -- -b /usr/local/bin

- From Source

If you have a Go 1.15+ compiler installed and configured:

GO111MODULE=on go get github.com/dwisiswant0/galer

- From GitHub

git clone https://github.com/dwisiswant0/galer

cd galer

go build .

(sudo) mv galer /usr/local/bin

Usage

- Basic Usage

Simply, galer can be run with:

galer -u "http://domain.tld"

- Flags

galer -h

This will display help for the tool. Here are all the switches it supports.

| Flag | Description |
|---|---|
| -u, --url | Target to fetch (single target URL or list) |
| -e, --extension | Show only certain extensions (comma-separated, e.g. js,php) |
| -c, --concurrency | Concurrency level (default: 50) |
| --in-scope | Show in-scope URLs (same host) only |
| -o, --output | Save fetched URLs to a file |
| -t, --timeout | Maximum time (seconds) allowed for connection (default: 60) |
| -s, --silent | Silent mode (suppress errors) |
| -v, --verbose | Verbose mode (show error details unless silent mode is used) |
| -h, --help | Display help |
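The -e and --in-scope flags both narrow the fetched URLs. The sketch below shows one plausible way such filters could behave; it assumes extensions are matched against the URL path suffix (ignoring the query string) and that "in scope" means the same hostname as the target. The `keepURL` function is hypothetical and galer's own matching rules may differ.

```go
package main

import (
	"fmt"
	"net/url"
	"path"
	"strings"
)

// keepURL (hypothetical) reports whether rawURL passes filters modeled on
// the -e and --in-scope flags. exts is a comma-separated extension list
// such as "js,php" (empty means no extension filter); inScope keeps only
// URLs whose hostname matches target's hostname.
func keepURL(rawURL, target, exts string, inScope bool) bool {
	u, err := url.Parse(rawURL)
	if err != nil {
		return false
	}
	if inScope {
		t, err := url.Parse(target)
		if err != nil || !strings.EqualFold(u.Hostname(), t.Hostname()) {
			return false
		}
	}
	if exts == "" {
		return true
	}
	// path.Ext returns the suffix from the final dot, e.g. ".js".
	ext := strings.TrimPrefix(path.Ext(u.Path), ".")
	if ext == "" {
		return false
	}
	for _, e := range strings.Split(exts, ",") {
		if strings.EqualFold(strings.TrimSpace(e), ext) {
			return true
		}
	}
	return false
}

func main() {
	target := "http://domain.tld"
	found := []string{
		"http://domain.tld/app.js",
		"http://domain.tld/login.php?next=/",
		"http://cdn.other.tld/lib.js",
		"http://domain.tld/about",
	}
	// Roughly what `galer -u http://domain.tld -e js,php --in-scope` is meant to keep.
	for _, u := range found {
		if keepURL(u, target, "js,php", true) {
			fmt.Println(u)
		}
	}
}
```

Matching on `u.Path` rather than the raw string means a query such as `?next=/` does not hide the `.php` extension.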

Examples

- Single URL

galer -u "http://domain.tld"

- URLs from list

galer -u /path/to/urls.txt

- From Stdin

cat urls.txt | galer

- Chained with other tools:

subfinder -d domain.tld -silent | httpx -silent | galer

Library

You can also use galer as a library.

go get github.com/dwisiswant0/galer/pkg/galer

For example:

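    package main

    import (
        "fmt"

        "github.com/dwisiswant0/galer/pkg/galer"
    )

    func main() {
        // Set the timeout (the CLI's -t flag also defaults to 60).
        cfg := &galer.Config{
            Timeout: 60,
        }
        // Initialize galer with this configuration.
        cfg = galer.New(cfg)

        // Crawl the target and collect the URLs found in HTML attributes.
        run, err := cfg.Crawl("https://twitter.com")
        if err != nil {
            panic(err)
        }

        // Print each fetched URL.
        for _, url := range run {
            fmt.Println(url)
        }
    }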

TODOs

- Enable setting extra HTTP headers

- Provide a random User-Agent

- Bypass headless browser

- Add exceptions for specific extensions