Most Important Security Tips to Protect Your Website From Hackers

Do you think they need your data? Do you think they need access to your credit cards? There is something hackers value more than you might think. One of the main targets of modern hackers is access to your servers, which they can then use as an email relay for spam. But what else can they do...

Automatic API Attack Tool 2019

Automatic API Attack Tool is Imperva's customizable API attack tool. It takes an API specification as input, then generates and runs attacks based on it. The tool parses the API specification and creates fuzzing attack scenarios based on what the specification defines. Each endpoint...
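To make spec-driven fuzzing concrete, here is a minimal Python sketch. It assumes a hypothetical, heavily simplified spec (a dict mapping each path to its parameter names and declared types) rather than a real OpenAPI/Swagger document, and the payload lists are illustrative. Neither reflects how Imperva's tool actually represents specs or generates attacks. Each scenario fuzzes one parameter while the others keep a valid default, which makes any failure easy to attribute to a single input.

```python
from typing import Dict, List, Tuple

# Hypothetical, simplified spec: endpoint path -> {parameter name: declared type}.
# A real tool would parse an OpenAPI/Swagger document instead.
SPEC: Dict[str, Dict[str, str]] = {
    "/users/{id}": {"id": "integer"},
    "/search": {"q": "string", "limit": "integer"},
}

# Illustrative malformed or boundary values per declared type (assumed, not exhaustive).
FUZZ_VALUES: Dict[str, List[object]] = {
    "integer": [-1, 0, 2**63, "abc", None],
    "string": ["", "A" * 1024, "' OR '1'='1", None],
}

# Valid defaults used for every parameter that is not currently being fuzzed.
DEFAULTS: Dict[str, object] = {"integer": 1, "string": "test"}


def generate_scenarios(spec: Dict[str, Dict[str, str]]) -> List[Tuple[str, Dict[str, object]]]:
    """Return (path, params) pairs in which exactly one parameter holds a fuzz value."""
    scenarios = []
    for path, params in spec.items():
        for target, target_type in params.items():
            for value in FUZZ_VALUES.get(target_type, []):
                # Start from valid defaults, then swap in the fuzz value for one parameter.
                case = {name: DEFAULTS.get(ptype) for name, ptype in params.items()}
                case[target] = value
                scenarios.append((path, case))
    return scenarios


if __name__ == "__main__":
    for path, params in generate_scenarios(SPEC):
        print(path, params)
```

Sending each scenario to the target and watching for 5xx responses or unexpected bodies would be the next step; that part is left out here.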

Silver : Mass Scan IPs For Vulnerable Services

masscan is fast, nmap can fingerprint software, and vulners is a huge vulnerability database. Silver is a front-end that makes full use of these programs by parsing their data, spawning parallel processes, caching vulnerability data for faster scanning over time, and much more. Features: resumable scanning, Slack notifications, multi-core utilization, vulnerability data caching, and smart Shodan integration*. *Shodan integration is optional, but when linked, it can ...

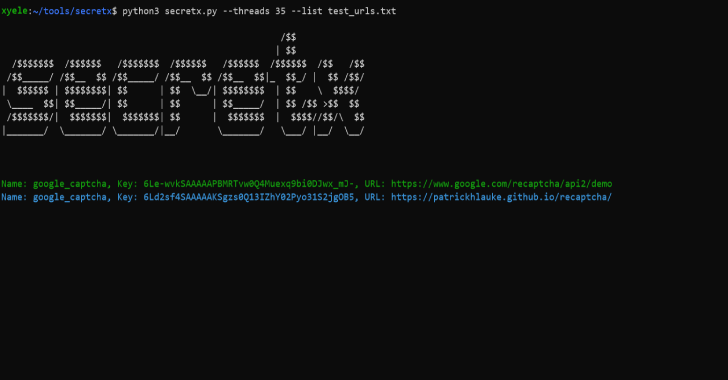

Secretx : Extracting API Keys & Secrets By Requesting Each URL In Your List

Secretx is a tool mainly used for extracting API keys and secrets by requesting each URL in your list.

Installation: python3 -m pip install -r requirements.txt

Usage: python3 secretx.py --list urlList.txt --threads 15

Optional arguments: --help, --colorless
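The request-and-match approach can be sketched in a few lines of Python. This is not Secretx's code: the two regular expressions cover publicly documented key formats (AWS access key IDs and Google API keys) and are assumptions standing in for whatever rule set the tool actually ships, and the names `find_secrets`, `fetch`, and `scan_urls` are hypothetical. The `threads` parameter mirrors the `--threads` option above.

```python
import re
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

# Illustrative patterns for two publicly documented key formats
# (an assumption, not Secretx's actual rule set).
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "google_api_key": re.compile(r"\bAIza[0-9A-Za-z\-_]{35}"),
}


def find_secrets(text: str) -> List[Tuple[str, str]]:
    """Return (pattern name, matched string) pairs found in a response body."""
    hits = []
    for name, pattern in PATTERNS.items():
        for match in pattern.findall(text):
            hits.append((name, match))
    return hits


def fetch(url: str) -> str:
    """Download a URL and decode it leniently; invalid URLs and network errors yield ""."""
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return resp.read().decode("utf-8", errors="replace")
    except (OSError, ValueError):  # URLError/HTTPError subclass OSError; bad URLs raise ValueError
        return ""


def scan_urls(urls: List[str], threads: int = 15) -> Dict[str, List[Tuple[str, str]]]:
    """Fetch URLs concurrently and map each URL to the secrets found in its body."""
    with ThreadPoolExecutor(max_workers=threads) as pool:
        bodies = pool.map(fetch, urls)  # results come back in input order
        return {url: find_secrets(body) for url, body in zip(urls, bodies)}
```

A list file like the one in the usage line could be fed in with `scan_urls(open("urlList.txt").read().split())`.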

ReconCobra : Complete Automated Pentest Framework For Information Gathering

ReconCobra is a complete automated pentest framework for information gathering, and it has been tested on Kali, Parrot OS, BlackArch, Termux, and Android LED TV.

Introduction

It is useful for banks, private organizations, and ethical hackers carrying out legal audits. It serves as a defensive method to find as much information as possible that could be used to gain unauthorized access and intrusion. With the emergence of more...

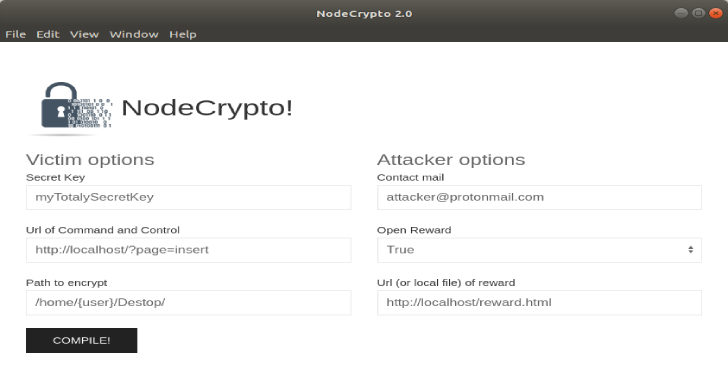

NodeCrypto : Linux Ransomware Written In NodeJs

NodeCrypto is a Linux ransomware written in NodeJs that encrypts predefined files. This project was created for educational purposes; you are solely responsible for its use.

Install Server

Upload all files in the server/ folder to your web server. Create an SQL database and import sql/nodeCrypto.sql. Edit server/libs/db.php and add your SQL ID.

Install & Run

git clone https://github.com/atmoner/nodeCrypto.git
cd nodeCrypto && npm install
cd...

PBTK : A Toolset For Reverse Engineering & Fuzzing Protobuf-Based Apps

PBTK is a tool for reverse engineering and fuzzing protobuf-based applications. Protobuf is a serialization format developed by Google and used in a growing number of Android, web, desktop, and other applications. It consists of a language for declaring data structures, which is then compiled to code or another kind of structure depending...
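Reverse engineering a protobuf app often starts with reading raw messages without the `.proto` file. On the wire, each field is a key varint, `(field_number << 3) | wire_type`, followed by a value, and integers are stored as base-128 varints (7 payload bits per byte, least significant group first, high bit set on every byte except the last). The Python sketch below decodes that layer; it is a simplified illustration, not PBTK's implementation, and it rejects the deprecated group wire types (3 and 4).

```python
from typing import List, Tuple, Union


def decode_varint(data: bytes, pos: int) -> Tuple[int, int]:
    """Decode a base-128 varint starting at pos; return (value, next position)."""
    result = 0
    shift = 0
    while True:
        if pos >= len(data):
            raise ValueError("truncated varint")
        byte = data[pos]
        pos += 1
        result |= (byte & 0x7F) << shift  # low 7 bits carry payload, least significant group first
        if not byte & 0x80:  # a clear high bit marks the final byte
            return result, pos
        shift += 7


def decode_fields(data: bytes) -> List[Tuple[int, int, Union[int, bytes]]]:
    """Split a serialized message into (field number, wire type, raw value) triples."""
    fields = []
    pos = 0
    while pos < len(data):
        key, pos = decode_varint(data, pos)
        field_number, wire_type = key >> 3, key & 0x07
        if wire_type == 0:  # varint: int32, int64, bool, enum, ...
            value, pos = decode_varint(data, pos)
        elif wire_type == 1:  # 64-bit fixed: fixed64, double, ...
            value, pos = data[pos:pos + 8], pos + 8
        elif wire_type == 2:  # length-delimited: strings, bytes, nested messages
            length, pos = decode_varint(data, pos)
            value, pos = data[pos:pos + length], pos + length
        elif wire_type == 5:  # 32-bit fixed: fixed32, float, ...
            value, pos = data[pos:pos + 4], pos + 4
        else:
            raise ValueError(f"unsupported wire type {wire_type}")
        if pos > len(data):
            raise ValueError("truncated field")
        fields.append((field_number, wire_type, value))
    return fields
```

For example, the bytes `08 96 01` decode to field 1, wire type 0, value 150. Length-delimited values can be passed back into `decode_fields` to probe whether they hold a nested message.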

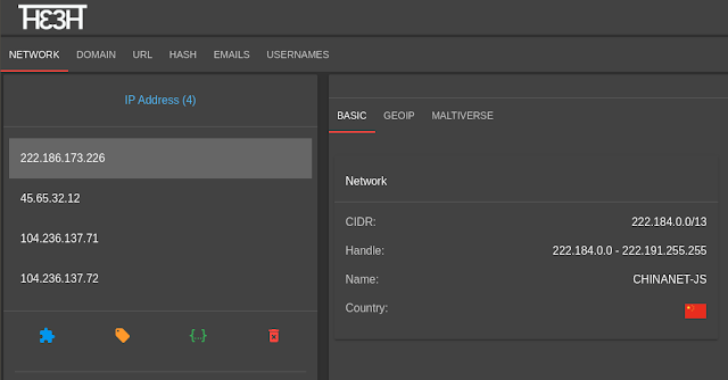

theTHE : The Threat Hunting Environment

You are a threat hunter. While investigating, have you found yourself with more than 20 tabs open in your browser, scattered .txt files full of data, and several terminals running in the background? theTHE centralizes all the information from an investigation in a single project and shares its results with your team (and with nobody ...

Exist : Web App For Aggregating & Analyzing Cyber Threat Intelligence

EXIST is a web application for aggregating and analyzing CTI (cyber threat intelligence). It is built with the following software: Python 3.5.4 and Django 1.11.22. It automatically fetches data from several CTI services and Twitter via their APIs and feeds. You can cross-search indicators via the web interface and the API. If you have servers logging network behaviors of clients (e.g., logs of...

Nginx Log Check : Nginx Log Security Analysis Script

Nginx Log Check is a security analysis script for Nginx logs. Its features include: top 20 address statistics, SQL injection analysis, scanner alert analysis, exploit detection, sensitive path access, file inclusion attacks, webshells, the top 20 URLs by response length, rare script file access, and script files that return 302 redirects.
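Two of these checks, top addresses and SQL injection analysis, can be sketched in Python as follows. The sketch assumes access logs in Nginx's default `combined` log format, and its SQL injection patterns are a small illustrative set rather than the script's actual rules; the function names are hypothetical.

```python
import re
from collections import Counter
from typing import Dict, Iterable, Iterator, List, Tuple
from urllib.parse import unquote

# Matches the start of Nginx's default "combined" log_format:
# $remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent ...
LINE_RE = re.compile(
    r'^(?P<addr>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+)'
)

# Illustrative SQL injection indicators; an assumption, far from a complete rule set.
SQLI_RE = re.compile(
    r"union\s+select|or\s+1\s*=\s*1|sleep\s*\(|information_schema",
    re.IGNORECASE,
)


def parse(lines: Iterable[str]) -> Iterator[Dict[str, str]]:
    """Yield one dict per line that matches the combined format; skip the rest."""
    for line in lines:
        match = LINE_RE.match(line)
        if match:
            yield match.groupdict()


def top_addresses(entries: List[Dict[str, str]], n: int = 20) -> List[Tuple[str, int]]:
    """Count requests per client address and return the n most frequent."""
    return Counter(entry["addr"] for entry in entries).most_common(n)


def sqli_suspects(entries: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Return entries whose URL-decoded request line matches an SQLi indicator."""
    return [entry for entry in entries if SQLI_RE.search(unquote(entry["request"]))]
```

Because both checks read the same entries, materialize them once, for example `entries = list(parse(open("/var/log/nginx/access.log")))`. The request line is URL-decoded before matching so that payloads like `UNION%20SELECT` are caught.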