Promptfoo is an open-source, developer-focused tool for building and testing Large Language Model (LLM) applications.

It provides features for evaluating, securing, and optimizing LLM applications, helping developers move from trial and error to a structured, repeatable development process.

Key Features Of Promptfoo

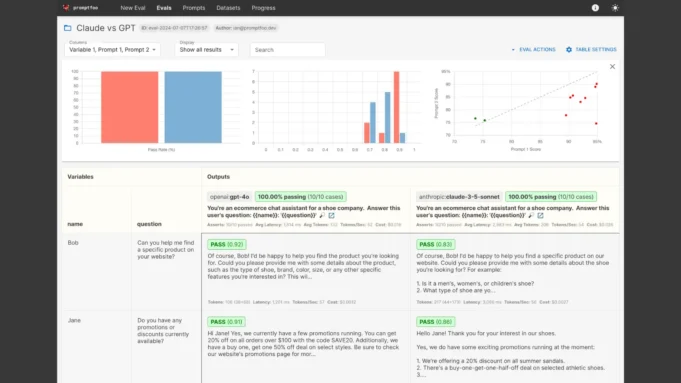

- Automated Evaluations: Promptfoo runs prompts and models against predefined test cases automatically, showing how well the outputs hold up across different inputs.

- Red Teaming and Vulnerability Scanning: It includes red teaming and vulnerability scanning to surface weaknesses in LLM applications so they can be fixed before deployment.

- Model Comparison: Developers can compare models side by side across providers such as OpenAI, Anthropic, Azure, Bedrock, and Ollama to choose the best fit for their applications.

- CI/CD Integration: Evaluations can run automatically in Continuous Integration/Continuous Deployment (CI/CD) pipelines, so changes to prompts or models are checked as part of the normal release process.

- Collaboration Tools: Results can be easily shared with team members, facilitating collaboration and decision-making.
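
To make the idea behind automated evaluations and model comparison concrete, here is a minimal conceptual sketch in Python. It is not Promptfoo's implementation or API: `model_a`, `model_b`, `TEST_CASES`, and `evaluate` are hypothetical names, and the "models" are plain functions standing in for real LLM calls. It only illustrates the pattern of running the same prompt and test cases against several models and comparing pass rates.

```python
from typing import Callable, Dict

# Hypothetical stand-ins for real model calls; a real setup would call an LLM API.
def model_a(prompt: str) -> str:
    return prompt.upper()

def model_b(prompt: str) -> str:
    return prompt

PROMPT_TEMPLATE = "Repeat this word in capital letters: {word}"

# Each test case pairs template variables with a check on the model output.
TEST_CASES = [
    {"vars": {"word": "hello"}, "check": lambda out: "HELLO" in out},
    {"vars": {"word": "world"}, "check": lambda out: "WORLD" in out},
]

def evaluate(models: Dict[str, Callable[[str], str]]) -> Dict[str, float]:
    """Return the fraction of test cases that each model passes."""
    pass_rates = {}
    for name, model in models.items():
        passed = 0
        for case in TEST_CASES:
            prompt = PROMPT_TEMPLATE.format(**case["vars"])
            output = model(prompt)
            if case["check"](output):
                passed += 1
        pass_rates[name] = passed / len(TEST_CASES)
    return pass_rates

results = evaluate({"model_a": model_a, "model_b": model_b})
print(results)
```

A side-by-side table of such pass rates is the kind of metrics-based view that lets a team pick a model based on evidence rather than impressions.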

Benefits Of Using Promptfoo

- Developer-First Approach: Promptfoo is designed with developers in mind, offering features like live reload and caching for faster development.

- Privacy: Evaluations run locally on the developer’s machine, so sensitive prompts are sent only to the model providers the developer configures.

- Flexibility: Compatible with any LLM API and programming language, making it versatile for various development environments.

- Battle-Tested: According to the project, it has been used to build LLM applications serving over 10 million users in production.

- Data-Driven Decisions: Provides metrics-based insights to guide development decisions.

- Open Source: Licensed under MIT, with an active community contributing to its development.
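
As an illustration of the flexibility point, the sketch below shows what a custom Python provider might look like. It assumes Promptfoo's Python provider interface, which is documented to call a function named `call_api` and to expect a dictionary with an `output` key; the exact signature should be checked against the current documentation. The file name `provider.py`, the `prefix` option, and the echo behavior are hypothetical and exist only for illustration.

```python
# provider.py: a hypothetical custom provider used only for illustration.
from typing import Any, Dict

def call_api(prompt: str, options: Dict[str, Any], context: Dict[str, Any]) -> Dict[str, Any]:
    """Assumed entry point for a Python provider; verify against the docs."""
    # Provider-specific settings are assumed to arrive under options["config"].
    config = options.get("config") or {}
    prefix = config.get("prefix", "echo")  # hypothetical option

    # A real provider would call a model or internal service here.
    output = f"{prefix}: {prompt}"

    # The result is returned as a dictionary carrying the model output.
    return {"output": output}
```

Under the same assumption, such a file would be referenced from the project configuration as a provider (for example with a `file://provider.py` path), which lets a team evaluate an in-house model or service with the same test cases used for hosted APIs.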

Getting Started With Promptfoo

To begin using Promptfoo, developers can install and initialize a project with `npx promptfoo@latest init`, then run their first evaluation with `npx promptfoo eval`.

The documentation includes guides for both evaluations and red teaming, which makes it straightforward for developers to start testing and improving their LLM applications.